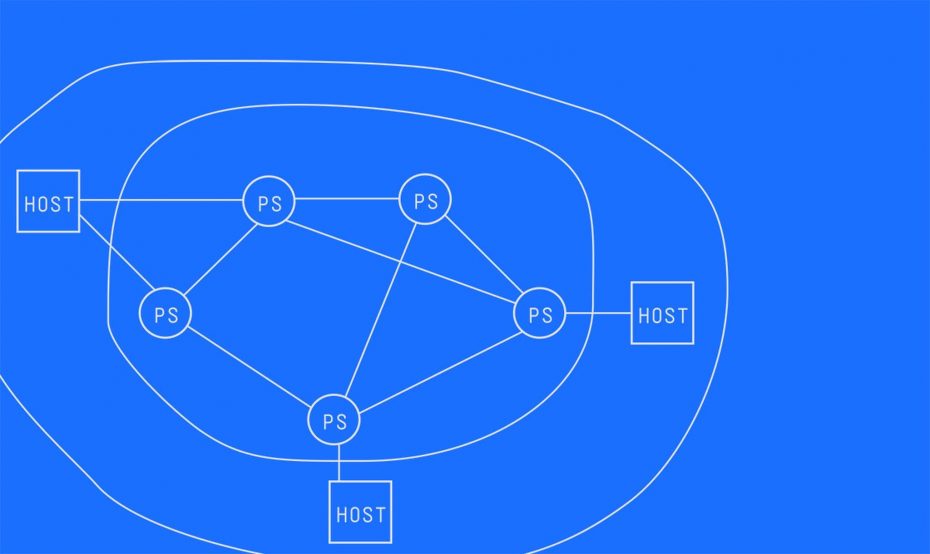

One of those consequences is inadvertent bias — prejudice for or against a group or person — making its way into the artificial intelligence algorithms.

“Bias can come up in a lot of different ways because our society is biased. The bias in society filters into the bias in data. The data then feeds the algorithm. Then we learn our algorithms based on the data,” Foulds said. “The ultimate effect is, potentially, there are unfair harms that happen to people because of AI systems.”

Emmanuel Johnson said the influence of societal bias isn’t often considered in technology. Johnson is a National Science Foundation Graduate Research Fellow pursuing a Ph.D. in Computer Science at the University of Southern California.

“Oftentimes, when we [use] an algorithm, we assume that if a machine gave us this answer, then it must be objective,” Johnson said. “But, in actuality, those results often aren’t objective.”

Information that seems objective may have been derived from a biased data set, with harmful results.

“We can think of some attributes as something we called a proxy variable,” Foulds said. “So, you can take away from your system the explicit attributes that you want to protect, like gender or race. You don’t want to make decisions based on that. Say somebody gets a loan because they’re male, or they’re white. You can delete those attributes from your system. But everything else is potentially correlated with those things.”

“For example, zip code is highly correlated with race and social class,” he added. “So, if you delete race and social class from your system and leave in zip code, then this apparently innocuous variable is going to allow your algorithm to discriminate.”
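The proxy-variable effect Foulds describes can be illustrated with a toy example. The sketch below is hypothetical. The records are invented synthetic data, the group labels "X" and "Y" and zip codes "A" and "B" are placeholders, and the "model" is a simple rule that approves a zip code if most of its past applicants were approved. The model never looks at race, but because race and zip code are correlated in the data, its decisions still split sharply along racial lines.

```python
from collections import defaultdict

# Hypothetical synthetic loan history: (zip_code, race, historically_approved).
# Assumption for illustration: zip "A" is mostly group X, zip "B" mostly group Y,
# and past approvals favored group X.
records = (
    [("A", "X", True)] * 8 + [("A", "Y", True)] * 1 + [("A", "X", False)] * 1
    + [("B", "Y", False)] * 7 + [("B", "Y", True)] * 2 + [("B", "X", True)] * 1
)


def train_without_race(rows):
    """Learn an approve/deny rule per zip code; the race field is deliberately ignored."""
    counts = defaultdict(lambda: [0, 0])  # zip -> [approved, total]
    for zip_code, _race, approved in rows:
        counts[zip_code][0] += int(approved)
        counts[zip_code][1] += 1
    # Approve a zip code if at least half of its past applicants were approved.
    return {z: approved / total >= 0.5 for z, (approved, total) in counts.items()}


def approval_rate_by_group(model, rows):
    """Measure how often the race-blind model approves each group."""
    totals = defaultdict(lambda: [0, 0])  # race -> [approved, total]
    for zip_code, race, _ in rows:
        totals[race][0] += int(model[zip_code])
        totals[race][1] += 1
    return {g: approved / total for g, (approved, total) in totals.items()}


model = train_without_race(records)
rates = approval_rate_by_group(model, records)
print(rates)  # {'X': 0.9, 'Y': 0.1}
```

Even though race was "deleted," the zip-code rule approves 90% of group X and only 10% of group Y in this made-up data, which is the kind of indirect discrimination Foulds warns about.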

Cynthia Matuszek said designing with possible abuses of a technology in mind can help safeguard a tool against misuse and potential harm to users. Matuszek is an assistant professor of computer science and electrical engineering at the University of Maryland, Baltimore County.

“If you’ve always got that in your head — ‘I’m building a tool. What’s it good for? What’s it bad for?’ — you can really steer the direction that these developments are going in a meaningful way,” she said.

The experts noted it is crucial to design algorithms in an ethical way to protect against potential harms.

“If we don’t have ethics, we’re lost,” said Dr. José-Marie Griffiths. “We’re going to be driven to push for function — the next best app, next best version of a technology, and the money that it can generate — rather than doing good for mankind.”

Griffiths is president of Dakota State University in Madison, South Dakota, and a member of the newly formed National Security Commission on Artificial Intelligence.

Ethical technology design can also be profitable, according to Kandyce Jackson, an attorney who works with AI investors and engineers on the legal implications of data activities and smart city infrastructure projects.

“There is the perspective that doing good is the right thing. Doing the ethical or moral thing is the right thing to do. We would like to assume that a lot of people want to do the good and moral thing,” Jackson said. “There is another side to come at that, that a lot of people just want to make money.”